In case you’re among the many people who have been casually wondering whether the advent of artificial intelligence might usher in the end of the world as you know it, there was some good news this week. Two of the senior executives at OpenAI, the company that brought us ChatGPT, announced that they are “doubling down” on their efforts to install permanent guardrails that will keep the technology “safe for humans.” In doing so, when (not if) the system reaches the level of Superintelligent AI, they hope to prevent it from “going rogue” and doing something unpleasant like murdering all of the humans or turning us into slaves. Don’t you feel better already? (Reuters)

ChatGPT’s creator OpenAI plans to invest significant resources and create a new research team that will seek to ensure its artificial intelligence remains safe for humans – eventually using AI to supervise itself, it said on Wednesday.

“The vast power of superintelligence could … lead to the disempowerment of humanity or even human extinction,” OpenAI co-founder Ilya Sutskever and head of alignment Jan Leike wrote in a blog post. “Currently, we don’t have a solution for steering or controlling a potentially superintelligent AI, and preventing it from going rogue.”

Superintelligent AI – systems more intelligent than humans – could arrive this decade, the blog post’s authors predicted. Humans will need better techniques than currently available to be able to control the superintelligent AI, hence the need for breakthroughs in so-called “alignment research,” which focuses on ensuring AI remains beneficial to humans, according to the authors.

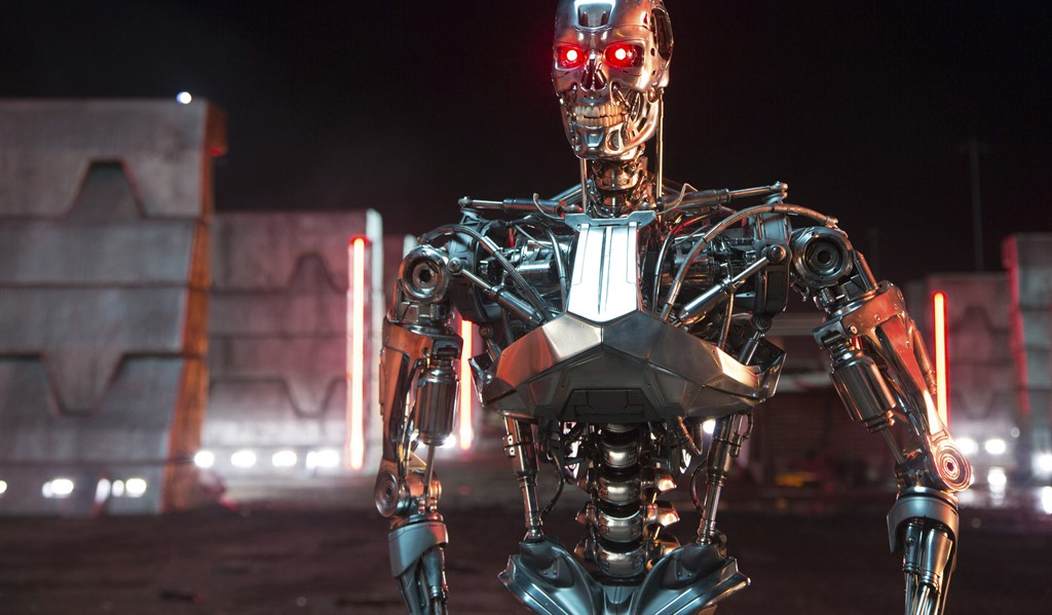

Before getting to the details of the announcement and what it might mean, let’s briefly review the levels of artificial intelligence under discussion. First, there is Narrow AI. That’s where we are now. The system can behave in what appears to be an intelligent fashion for limited purposes defined by the creators. Then we eventually reach General AI. That’s when the system is at least as smart as a human being and can begin expanding its horizons. Some in the industry argue that we are already there, but they can’t be sure. (That’s comforting, right?) Then, finally, we get to Super AI. That would be a system vastly “smarter” than any human brain. We’re seriously getting into SKYNET territory at that point.

The experts working in this field used to tell people not to worry because it would be a long time before we ever reached Artificial Super Intelligence, if it’s even possible to achieve it. But now, in this week’s announcement, the creators of ChatGPT are casually saying that we could reach Super AI levels in this decade. That’s just around the corner, so something has changed.

The announcement contains some other potentially disturbing suggestions. First of all, the purpose of this new design push, according to the authors, is to make sure the AI “remains safe for humans.” Excuse me for sticking my nose into your company’s business, but if you’re working toward that purpose, doesn’t that imply that you’re not entirely sure that it is or will remain safe for humans? And if it’s not, don’t you suppose that perhaps you should stop and take a step back?

Even if they do get some guardrails like this in place (or at least believe they’ve done so), you may be wondering how people with clunky old human brains can possibly supervise something so vastly smarter and faster than us. Never fear. They’ve already thought of that, too. They’re designing the guardrails so the system “will be able to supervise itself.” Seriously? I’m assuming neither of you has ever watched 2001: A Space Odyssey.

I’m not talking about hysterics or science fiction here. I’m simply repeating what the inventors of this technology are saying themselves. In this week’s announcement, the two OpenAI executives wrote that these guardrails are required because Super AI could (and this is a direct quote) “lead to the disempowerment of humanity or even human extinction.” Does everyone honestly believe that they have this under control? Or are we just sitting by while someone hands sticks of dynamite to a group of chimpanzees? I’m growing increasingly concerned that it might be the latter.
